NVIDIA DGX: The Ultimate AI Supercomputer for Deep Learning and HPC

Introduction to NVIDIA DGX

Artificial Intelligence (AI) and High-Performance Computing (HPC) have reshaped fields ranging from healthcare and finance to autonomous vehicles and natural language processing. NVIDIA DGX systems are a prominent platform in this space, giving enterprises and researchers purpose-built hardware and software for AI model training and data analytics. This article explores the capabilities, advantages, and use cases of NVIDIA DGX, and explains why it is a leading choice for AI-driven workloads.

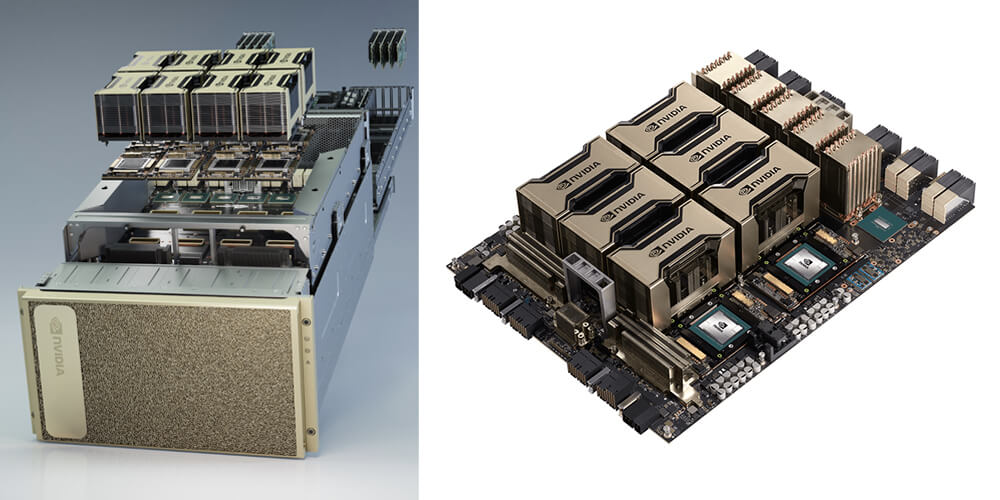

What is NVIDIA DGX?

The NVIDIA DGX is a series of AI supercomputers designed to accelerate deep learning and HPC applications. These systems are built with cutting-edge NVIDIA GPUs, high-speed interconnects, and an optimized AI software stack to deliver unparalleled performance for AI training and inference tasks. Whether for research institutions, startups, or large enterprises, NVIDIA DGX provides a robust infrastructure to handle complex AI workloads efficiently.

Key Features of NVIDIA DGX

1. State-of-the-Art GPU Architecture

Each NVIDIA DGX system is equipped with high-performance NVIDIA data center GPUs, such as the NVIDIA A100 Tensor Core GPU. In A100-based systems, these GPUs offer:

- Multi-Instance GPU (MIG) technology, which partitions a single GPU into as many as seven isolated instances for better utilization.

- Third-generation Tensor Cores for accelerated AI computations.

- NVLink and NVSwitch interconnects for high-speed communication between GPUs.

2. Optimized Software Stack

NVIDIA DGX systems come with an optimized AI software stack that includes:

- NVIDIA CUDA, enabling efficient parallel computing.

- NVIDIA AI Enterprise, a software suite offering supported, pre-configured AI and ML frameworks.

- NGC Catalog, providing access to AI models, containers, and SDKs.
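As a rough illustration of how containers from the NGC Catalog are typically consumed, the sketch below assembles a `docker run` command for a GPU-enabled container. The helper name `build_ngc_run_command` and the image tag are assumptions chosen for illustration; check the NGC Catalog for current image names and tags. The command is only built and printed, not executed.

```python
import shlex


def build_ngc_run_command(image: str, tag: str, gpus: str = "all") -> list[str]:
    """Build (but do not run) a docker command for an NGC container.

    `image` and `tag` are caller-supplied; the values used below are
    illustrative assumptions, not taken from the article.
    """
    return [
        "docker", "run",
        "--gpus", gpus,  # expose GPUs to the container (requires the NVIDIA Container Toolkit)
        "--rm",          # remove the container when it exits
        "-it",           # attach an interactive terminal
        f"nvcr.io/nvidia/{image}:{tag}",
    ]


# Example tag only; substitute whichever release you actually intend to use.
cmd = build_ngc_run_command("pytorch", "24.01-py3")
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, which makes it straightforward to hand to `subprocess.run` later without shell quoting problems.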

3. High-Speed Networking and Storage

- InfiniBand support provides high-bandwidth, low-latency data transfer between systems for large-scale AI workloads.

- High-performance SSD storage optimizes data access and model training speed.

- Scalability allows multiple DGX systems to be connected into an AI supercomputer.

NVIDIA DGX Models

1. NVIDIA DGX Station

Designed for data scientists and AI developers, the NVIDIA DGX Station provides workstation-level performance without requiring a data center. It is ideal for small AI teams or organizations looking for an AI development platform with minimal setup requirements.

2. NVIDIA DGX A100

A widely used AI system, the NVIDIA DGX A100 is designed for training, inference, and data analytics. It features eight NVIDIA A100 GPUs with 320 GB of total GPU memory (eight 40 GB GPUs; a 640 GB configuration with 80 GB GPUs is also available), giving it the capacity to handle large-scale AI workloads.
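To put the 320 GB figure in context, the following sketch works through the arithmetic: it sums per-GPU memory and then estimates how large a model could be trained within that budget. The 16 bytes per parameter is a common rule of thumb for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state) and is an assumption here, as are the function names; activations and framework overhead are ignored, so real limits are lower.

```python
GB = 10**9  # decimal gigabytes, assumed for simplicity


def aggregate_gpu_memory_gb(num_gpus: int, gb_per_gpu: int) -> int:
    """Total GPU memory across all GPUs in one system."""
    return num_gpus * gb_per_gpu


def max_trainable_params(total_gb: int, bytes_per_param: int = 16) -> int:
    """Upper bound on parameter count, ignoring activations and overhead.

    bytes_per_param=16 is a rule-of-thumb assumption for mixed-precision
    Adam training, not a figure from the article.
    """
    return (total_gb * GB) // bytes_per_param


total = aggregate_gpu_memory_gb(num_gpus=8, gb_per_gpu=40)
print(total)                                # 320
print(max_trainable_params(total) / 1e9)    # 20.0 (billions of parameters)
```

Under these assumptions, a single 320 GB system tops out at roughly 20 billion trainable parameters before activations are counted, which is one reason larger models are spread across multiple systems.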

3. NVIDIA DGX H100

A newer generation in the DGX series, the NVIDIA DGX H100 takes AI acceleration further. It is built with NVIDIA H100 Tensor Core GPUs, which offer:

- Up to 4X faster AI training than the A100 generation on some large language model workloads, according to NVIDIA.

- FP8 precision for optimized deep learning workloads.

- NVLink interconnects for seamless multi-GPU operations.
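One way to see why FP8 matters is to compare how much memory a model's weights occupy at different precisions. The sketch below does this for a hypothetical 7-billion-parameter model; the model size and the helper name are illustrative assumptions. In practice FP8 is usually applied selectively alongside higher-precision values, so real savings tend to be smaller than this weights-only estimate suggests.

```python
# Storage size of one value at each precision, in bytes.
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}


def weights_size_gb(num_params: int, precision: str) -> float:
    """Memory needed to store the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9


hypothetical_params = 7_000_000_000  # illustrative model size, not from the article

for precision in BYTES_PER_VALUE:
    print(precision, weights_size_gb(hypothetical_params, precision))
# FP32 28.0
# FP16 14.0
# FP8 7.0
```

Halving the bytes per value also halves the data moved between memory and compute units, which is where much of the throughput gain from lower precision comes from.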

4. NVIDIA DGX SuperPOD

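For enterprises requiring AI infrastructure at scale, the NVIDIA DGX SuperPOD offers an entire AI data center solution. It consists of multiple DGX systems interconnected via NVIDIA networking solutions. Because a single DGX A100 is already rated by NVIDIA at 5 petaFLOPS of AI performance, a SuperPOD aggregates many times that, scaling toward exaflop-class AI computing power in large configurations.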

Benefits of Using NVIDIA DGX

1. Unmatched AI Performance

With industry-leading GPUs and optimized software, NVIDIA DGX enables rapid training and inference of AI models and can shorten training cycles, in some cases from weeks to days, depending on the model and dataset.

2. Scalability and Flexibility

Organizations can start with a single DGX system and scale up to a full DGX SuperPOD, adapting to growing AI demands without major infrastructure changes.

3. Enterprise-Ready AI Infrastructure

NVIDIA DGX is designed to integrate seamlessly into enterprise IT environments, supporting containerized AI workloads and cloud-native deployments.

4. Cost and Energy Efficiency

Although DGX systems are high-end investments, consolidating work onto fewer, denser GPU systems can finish the same jobs in less time and with less hardware, which may reduce energy costs and operational expenses in large-scale AI projects.

Use Cases of NVIDIA DGX

1. Healthcare and Life Sciences

NVIDIA DGX is widely used in healthcare for:

- Medical image analysis (e.g., MRI and CT scan processing).

- Drug discovery using AI-powered simulations.

- Genomic sequencing for personalized medicine.

2. Autonomous Vehicles

Self-driving car manufacturers leverage NVIDIA DGX for training neural networks that process real-world driving data, enabling advancements in autonomous vehicle safety and decision-making.

3. Finance and Trading

Financial institutions use NVIDIA DGX for predictive analytics, fraud detection, and algorithmic trading, benefiting from real-time AI-driven insights.

4. Natural Language Processing (NLP)

With NVIDIA DGX, companies can train large language models like GPT, BERT, and LLaMA, improving AI-powered chatbots, translation services, and content generation.

5. Climate Science and Weather Prediction

Meteorologists and researchers use NVIDIA DGX to develop AI models for climate forecasting, extreme weather prediction, and environmental analysis.

Challenges and Considerations

Despite its advanced capabilities, organizations considering NVIDIA DGX should be aware of potential challenges:

- High Initial Cost: DGX systems require significant upfront investment, making them more suitable for enterprises and research institutions with dedicated AI budgets.

- Cooling and Power Requirements: A single rack-mounted DGX system can draw several kilowatts, and a DGX SuperPOD multiplies that, so deployments need data-center-grade power and cooling infrastructure.

- Expertise Required: Leveraging NVIDIA DGX to its full potential requires skilled AI engineers and data scientists.
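The power consideration above can be turned into a rough budgeting exercise. The sketch below estimates annual energy use and cost for one system; every input value (power draw, utilization, electricity price, and the PUE cooling overhead factor) is an illustrative assumption rather than a figure from this article, so take real numbers from the system datasheet and your facility.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy drawn by the system itself over one year."""
    return power_kw * utilization * HOURS_PER_YEAR


def annual_energy_cost(power_kw: float, price_per_kwh: float,
                       utilization: float = 1.0, pue: float = 1.0) -> float:
    """Yearly cost including facility overhead.

    `pue` (power usage effectiveness) scales IT power up to account for
    cooling and other facility loads; 1.0 means no overhead.
    """
    return annual_energy_kwh(power_kw, utilization) * pue * price_per_kwh


# All values below are hypothetical placeholders.
cost = annual_energy_cost(power_kw=6.5, price_per_kwh=0.10,
                          utilization=0.7, pue=1.5)
print(f"Estimated annual energy cost: {cost:,.0f}")
```

Keeping utilization and PUE as explicit parameters makes it easy to compare scenarios, such as a lightly loaded system in an efficient facility against a fully loaded one in an older data center.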

Conclusion

The NVIDIA DGX platform combines high computational power, scalability, and efficiency for AI and HPC. Whether for deep learning research, autonomous vehicle development, or enterprise AI applications, NVIDIA DGX provides a strong foundation for tackling complex AI challenges. While the investment is significant, the long-term benefits in performance, efficiency, and innovation can make it a worthwhile choice for organizations serious about AI. As AI continues to evolve, the DGX line gives businesses and researchers a platform for pushing the boundaries of artificial intelligence.
